Manual testing part 3: writing test cases, software test execution and reporting

Coming to software testing, software test planning and software test design are carried out.

SOFTWARE TEST PLANNING : Here the PM (project manager) selects a test lead, and this test lead takes care of:

- Team formation

- Identifying tactical risks

- Preparing test plan in IEEE 829 format and

- Review of test plan

SOFTWARE TEST DESIGN : Once the test plan document is baselined, the corresponding testers can start test design for the modules and testing topics they are responsible for.

1 . UNDERSTANDING REQUIREMENTS : In general, every test engineer studies the complete SRS (software requirements specification) by following one of the models below :

- Getting training from an SME (subject matter expert)

- Getting training from the SA

- Studying the SRS and getting doubts clarified by the SA

- Studying the SRS and going for knowledge transfer.

2 . PREPARING TEST SCENARIOS AND CASES : After understanding all the requirements, testers prepare test scenarios and cases for the selected requirements.

A test scenario is an activity to be tested in a module or a requirement of a software build.

Every test scenario consists of multiple conditions. Each testable condition is called a TEST CASE.

So one software build consists of multiple requirements, one requirement consists of multiple scenarios, and one scenario consists of multiple cases.

To write test scenarios and cases for the selected requirements and testing topics, testers can use the four methods below :

- Functional specifications based test design.

- Use cases based test design.

- Screens based test design and

- Non functional specifications based test design .

Case study 1 : ONLINE INSURANCE SYSTEM :

Functional specification 1 : As per the insurance company's requirement, the OIS (online insurance site) home page allows insurance agents to log in. Here the agent enters a user ID and a password. The user ID field takes lowercase alphanumerics, 4 to 16 positions long. The password field takes lowercase alphabets, 4 to 8 characters long. The agent clicks the submit button to get the next page for a valid user, or an error message for invalid data.

Test Scenario 1 : Validate user ID field in login page .

Test cases :

BVA (boundary value analysis) for size

min = 4 positions - valid

min+1 = 5 positions - valid

min-1 = 3 positions - invalid

max = 16 positions - valid

max-1 = 15 positions - valid

max+1 = 17 positions - invalid

ECP (equivalence class partitioning) for type

| Valid | Invalid |
| --- | --- |
| [a-z0-9] | [A-Z] |
| | Blank field |
| | Special characters |

This is how we write test cases for a scenario. In BVA we validate the number of positions, and in ECP we validate which inputs are valid and which are invalid. In ECP we have used regular expressions; you can learn regular expressions on the net. As you can see, in the valid column only lowercase alphanumerics are valid.
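The BVA and ECP cases for the user ID field can be sketched in Python as follows. This is only an illustration: `boundary_lengths`, `USER_ID_PATTERN` and `is_valid_user_id` are hypothetical names, and the validator is rebuilt from the functional specification because the real OIS code is not shown in this post.

```python
import re


def boundary_lengths(min_len, max_len):
    """Return the six BVA lengths mapped to whether each should be accepted."""
    candidates = [min_len - 1, min_len, min_len + 1,
                  max_len - 1, max_len, max_len + 1]
    return {n: min_len <= n <= max_len for n in candidates}


# Hypothetical validator derived from the specification:
# 4 to 16 positions, lowercase letters or digits only.
USER_ID_PATTERN = re.compile(r"[a-z0-9]{4,16}")


def is_valid_user_id(value):
    """True when the whole value matches the user ID rule."""
    return USER_ID_PATTERN.fullmatch(value) is not None


# BVA: one sample string per boundary length.
for length, expected in boundary_lengths(4, 16).items():
    assert is_valid_user_id("a" * length) == expected

# ECP: one representative value per equivalence class.
ecp_samples = {
    "agent007": True,   # [a-z0-9], valid class
    "AGENT": False,     # [A-Z], invalid class
    "": False,          # blank field, invalid class
    "agent@1": False,   # special characters, invalid class
}
for sample, expected in ecp_samples.items():
    assert is_valid_user_id(sample) == expected
```

The same two helpers would cover the password field by changing the pattern to lowercase letters only and the size range to 4 to 8.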

Test scenario 2 : Validate password field in login page .

Test cases :

BVA (boundary value analysis) for size

min = 4 positions - valid

min-1 = 3 positions - invalid

min+1 = 5 positions - valid

max = 8 positions - valid

max-1 = 7 positions - valid

max+1 = 9 positions - invalid

ECP (equivalence class partitioning) for type

| Valid | Invalid |
| --- | --- |
| [a-z] | [A-Z0-9] |
| | Special characters |
| | Blank field |

Test scenario 3 : Validate login operation by clicking submit button.

Test cases : Decision table

| User ID | Password | Expected output after clicking submit button |
| --- | --- | --- |
| Valid user ID w.r.t DB | Valid password w.r.t DB | Next page |
| Valid user ID w.r.t DB | Invalid password w.r.t DB | Error message |
| Invalid user ID w.r.t DB | Valid password w.r.t DB | Error message |
| Blank field | Valid password w.r.t DB | Error message |
| Valid user ID w.r.t DB | Blank field | Error message |
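The decision table for the login operation can also be run as a small data-driven check. The sketch below is an assumption for illustration only: `AGENTS` stands in for the database, and `login` is a hypothetical function, not the real OIS implementation.

```python
# Hypothetical stand-in for the database of registered agents.
AGENTS = {"agent007": "secret"}


def login(user_id, password):
    """Return the expected output after clicking the submit button."""
    if user_id and password and AGENTS.get(user_id) == password:
        return "Next page"
    return "Error message"


# One row per rule of the decision table: (user ID, password, expected output).
DECISION_TABLE = [
    ("agent007", "secret", "Next page"),     # valid user ID, valid password
    ("agent007", "wrong", "Error message"),  # valid user ID, invalid password
    ("nobody", "secret", "Error message"),   # invalid user ID, valid password
    ("", "secret", "Error message"),         # blank user ID
    ("agent007", "", "Error message"),       # blank password
]


def run_decision_table():
    """Return the rows whose actual output differs from the expected output."""
    return [row for row in DECISION_TABLE if login(row[0], row[1]) != row[2]]


failed_rows = run_decision_table()
print("failed rows:", failed_rows)
```

Keeping the rules as data means a new rule is one more row, which mirrors how the cases are kept in an Excel sheet.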

This is how we write scenarios and test cases. We write test scenarios in MS Office and test cases in MS Excel, and attach test data (sample data) to the test cases in MS Excel.

I have done a manual testing project on an online banking site. If anyone has any doubt regarding writing test cases and test data, you can mail me and I will send a copy of my project.

This is how functional specifications based test design is done.

USE CASES BASED TEST DESIGN : In use case based test design we get more detailed information: detailed documents, flow diagrams, and screenshots or prototypes.

SCREENS BASED TEST DESIGN : In screens based test design we get only a screenshot of the design, and we have to write the test cases and test data from it. This is a somewhat difficult task.

NON FUNCTIONAL SPECIFICATION BASED TEST DESIGN : For functional test design, testers can follow the three methods discussed previously and use black box testing techniques. But for non functional test design, testers can follow only one method, without any specific technique.

USABILITY TEST SCENARIOS/CASES : We take an online bank site (OBS) as an example and perform these tests on it. As per the banking people's expectations, the OBS site pages should be user friendly. In usability testing, test scenarios and test cases are the same.

TEST SCENARIO/CASE 1: Validate spelling check of labels throughout the pages of OBS site.

TEST SCENARIO/CASE 2: Validate “Init cap” of labels throughout the pages of OBS site.

TEST SCENARIO/CASE 3: Validate meaning of labels throughout the pages of OBS site.

TEST SCENARIO/CASE 4: Validate line spacing gap between label and object throughout the pages of OBS site.

TEST SCENARIO/CASE 5: Validate line spacing gap between object and object throughout the pages of OBS site.

TEST SCENARIO/CASE 6: Validate alignment of objects throughout the pages of OBS site.

TEST SCENARIO/CASE 7: Validate position of functionality related objects throughout the pages of OBS site.

TEST SCENARIO/CASE 8: Validate frames, if available, throughout the pages of OBS site.

TEST SCENARIO/CASE 9: Validate ‘OK’ and ‘CANCEL’ like buttons throughout the pages of OBS site.

TEST SCENARIO/CASE 10: Validate the existence of system menu throughout the pages of OBS site.

TEST SCENARIO/CASE 11: Validate icon symbols w.r.t functionality mapping throughout the pages of OBS site.

TEST SCENARIO/CASE 12: Validate icon symbol and tool tip message mapping throughout the pages of OBS site.

TEST SCENARIO/CASE 13: Validate date format visibility for all date objects throughout the pages of OBS site.

TEST SCENARIO/CASE 14: Validate keyboard access on all objects throughout the pages of OBS site.

TEST SCENARIO/CASE 15: Validate meaning of error messages coming throughout the pages of OBS site.

TEST SCENARIO/CASE 16: Validate uniformity of label font size throughout the pages of OBS site.

TEST SCENARIO/CASE 17: Validate uniformity of label font style throughout the pages of OBS site.

TEST SCENARIO/CASE 18: Validate uniformity of label color contrast throughout the pages of OBS site.

TEST SCENARIO/CASE 19: Validate the existence of status bar or progress bar controls throughout the pages of OBS site.

TEST SCENARIO/CASE 20: Validate help messages w.r.t functionality throughout the pages of OBS site.

Usability testing has the least preference.

COMPATIBILITY TESTING :

NON FUNCTIONAL SPECIFICATIONS : As per the bank people's expectations, the OBS site will run on the internet. Here the bank's server computer has Windows 2003 Server, Windows 2008 Server or Red Hat Linux server as its operating system. Public client systems have Windows XP, Windows 7 or Windows 8 as the operating system. While launching the OBS site, the public can use a browser such as Internet Explorer, Google Chrome, Mozilla Firefox, Safari, Netscape or Opera.

TEST SCENARIO : Validate login operation of OBS site on the customer expected platforms.

TEST CASES : Compatibility testing :

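| Platform | Version | Yes/no |
| --- | --- | --- |
| Server side operating system | Windows 2003 | Yes |
| Server side operating system | Windows 2008 | Yes |
| Server side operating system | Linux Red Hat | Yes |
| Server side operating system | Others | Yes/no |
| Client side operating system | Windows XP | Yes |
| Client side operating system | Windows Vista | Yes |
| Client side operating system | Windows 8 | Yes |
| Client side operating system | Windows 7 | Yes |
| Client side operating system | Others | Yes/no |
| Client side browser | Internet Explorer | Yes |
| Client side browser | Google Chrome | Yes |
| Client side browser | Safari | Yes |
| Client side browser | Mozilla Firefox | Yes |
| Client side browser | Opera | Yes |
| Client side browser | Netscape Navigator | Yes |
| Client side browser | Others | Yes/no |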
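If every combination of server operating system, client operating system and browser has to be covered, the list of environments can be generated instead of written by hand. This is a sketch under that assumption; the table in this post lists each platform on its own, and the "others" rows are left out here because they name no specific version.

```python
from itertools import product

# Platform versions taken from the compatibility table ("others" omitted).
SERVER_OS = ["Windows 2003", "Windows 2008", "Linux Red Hat"]
CLIENT_OS = ["Windows XP", "Windows Vista", "Windows 7", "Windows 8"]
BROWSERS = ["Internet Explorer", "Google Chrome", "Safari",
            "Mozilla Firefox", "Opera", "Netscape Navigator"]


def compatibility_matrix():
    """Return one environment per server OS / client OS / browser combination."""
    return [
        {"server_os": server, "client_os": client, "browser": browser}
        for server, client, browser in product(SERVER_OS, CLIENT_OS, BROWSERS)
    ]


matrix = compatibility_matrix()
print(len(matrix), "environments in which to repeat the login scenario")
```

In practice a team would usually trim this list to the combinations the customer actually uses.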

This is how we write test cases for compatibility testing. Let us look at hardware configuration testing now.

HARDWARE CONFIGURATION TESTING : As per the banking people's expectations, the OBS site will run on the internet. The internet is formed from networks such as bus topology, ring topology and hub based topology. In the mini statement operation, users can use the print button to print the statement on an inkjet, dot matrix or laser printer.

TEST SCENARIO : Validate the mini statement operation of the OBS site in the customer expected environment, and test the print option.

TEST CASES : Hardware configuration testing

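| Hardware component | Type | Yes/no |
| --- | --- | --- |
| Networks | Bus topology | Yes |
| Networks | Ring topology | Yes |
| Networks | Hub topology | Yes |
| Networks | Others | Yes/no |
| Printer | Inkjet | Yes |
| Printer | Dot matrix | Yes |
| Printer | Laser | Yes |
| Printer | Others | Yes/no |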

PERFORMANCE TESTING : As per the banking people's expectations, the OBS site can be used by 2000 users at a time.

TEST SCENARIO : Validate login operation of OBS site at the customer expected load levels.

TEST CASES : Performance testing / Ability matrix

| Load level | Expectations |
| --- | --- |
| 2000 users | Speed in process |
| > 2000 users by increasing level by level | To find out peak load/load capacity |
| >>> 2000 users (huge load suddenly) | To find out server crashing point |
| 2000 users (continuously for long time) | To find out memory leakage |

This is how we do performance testing for a scenario

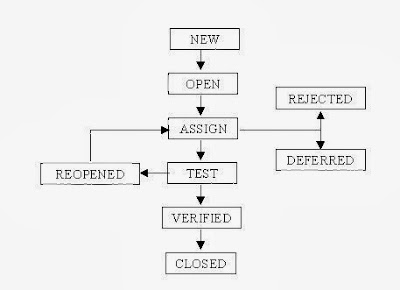

BUG LIFE CYCLE / DEFECT LIFE CYCLE :

Figure: Bug life cycle

Explanation of the bug life cycle: When a tester finds a new defect, its status is NEW and it is sent to the developer. If the developer accepts the defect, it is called a bug and its status changes to OPEN. If the developer rejects it, its status becomes REJECTED. If the developer accepts it but its severity is low, its status is kept as DEFERRED. When the developer removes the defect, its status is set to FIX and it is sent back to the tester. If the tester finds the defect again, the status is set to REOPEN and it goes back to the developer; if there is no defect, the status is set to VERIFIED and then CLOSED.
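The life cycle described above can be written down as a small state machine. This is a sketch only: the names `Status`, `TRANSITIONS` and `move` are hypothetical, REOPEN going back to FIX is an assumption based on the defect being sent back to the developer, and REJECTED and DEFERRED are treated as end states because the explanation does not say what happens after them.

```python
from enum import Enum


class Status(Enum):
    NEW = "new"
    OPEN = "open"
    REJECTED = "rejected"
    DEFERRED = "deferred"
    FIX = "fix"
    REOPEN = "reopen"
    VERIFIED = "verified"
    CLOSED = "closed"


# Allowed moves, following the explanation of the bug life cycle.
TRANSITIONS = {
    Status.NEW: {Status.OPEN, Status.REJECTED, Status.DEFERRED},
    Status.OPEN: {Status.FIX},
    Status.FIX: {Status.REOPEN, Status.VERIFIED},
    Status.REOPEN: {Status.FIX},      # assumption: developer fixes it again
    Status.VERIFIED: {Status.CLOSED},
    Status.REJECTED: set(),           # assumption: end state
    Status.DEFERRED: set(),           # assumption: end state in this sketch
    Status.CLOSED: set(),
}


def move(current, target):
    """Return the new status, or raise ValueError for a move not in the cycle."""
    if target not in TRANSITIONS[current]:
        raise ValueError(f"cannot move from {current.value} to {target.value}")
    return target


# A defect that is reopened once before being verified and closed.
status = Status.NEW
for step in (Status.OPEN, Status.FIX, Status.REOPEN,
             Status.FIX, Status.VERIFIED, Status.CLOSED):
    status = move(status, step)
print(status.value)
```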

Levels of test execution :

The levels of test execution are smoke testing, real testing, retest, sanity test, regression test and final regression testing.

Smoke testing is mandatory on every software build version. Real testing is conducted on every software build except the final build. The retest, sanity test and regression test levels come on every s/w build except the first version. Final regression testing comes on the final s/w build only.
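The rules above about which level applies to which build can be captured in a few lines. The function name `levels_for_build` and its parameters are hypothetical, and the sketch assumes builds are numbered from 1.

```python
def levels_for_build(build_number, is_final):
    """Return the test execution levels that apply to one s/w build."""
    levels = ["smoke testing"]                # mandatory on every build
    if not is_final:
        levels.append("real testing")         # every build except the final one
    if build_number > 1:
        # every build except the first version
        levels += ["retest", "sanity test", "regression test"]
    if is_final:
        levels.append("final regression testing")  # final build only
    return levels


print(levels_for_build(1, is_final=False))
print(levels_for_build(2, is_final=False))
print(levels_for_build(5, is_final=True))
```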

The combination of the test environment and the documents prepared by testers is called the test bed or test harness.

Smoke testing : After receiving a s/w build from the developers, the testing team gathers in one cabin and executes a fixed set of cases to confirm whether the s/w build is testable. If the s/w build is not working, it is sent back to the developers.

Real testing : When the s/w build is working, the testers download it to their own cabins and test the modules they are responsible for by executing all test cases one by one.

When a defect is found, it is sent to the defect tracking team. An accepted defect is fixed by the developer and sent back to the tester. Then smoke testing is done again.

Retest, sanity test and regression test : Here the tester checks whether the bug is fixed, whether any other module is affected by the fix, and whether the whole project is working properly.

SEVERITY : Seriousness of the defect with respect to s/w build functionality.

PRIORITY : Importance of fixing the defect with respect to the customer.

A defect is called an issue in the US and an incident in the UK.

Any defect found should be reported to the defect tracking team in IEEE 829 format in an Excel sheet. The report should contain the fields listed below :

- Defect ID : Unique number or name

- Defect description : About the defect

- Build version : Version number of the build

- Feature or module : Name of the module in which the defect was found.

- Failed scenario and cases : Test cases document ID, and the cases that failed.

- Severity : The seriousness of the defect w.r.t s/w build functionality (High, medium, low)

- Priority : Importance of fixing the defect w.r.t the customer (High, medium, low)

- Reproducible : Whether the defect appears always or rarely

- Attachments : Screenshots of the defect, if required

- Status : Status of the defect, whether it is open, reopen, etc.

- Detected by (name) : Name of the tester

- Detected on : Date of detection

- Assigned to : Reporting to the DT (defect tracking team)

- Test environment : Details of the system on which the defect was detected

- Suggested fix : Suggestions to fix the defect (optional).
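The fields listed above map naturally onto a record type. The sketch below uses a Python dataclass; the class name `DefectReport`, the field names and every sample value are hypothetical, and it only mirrors the list, not the full IEEE 829 layout.

```python
from dataclasses import dataclass, field, asdict
from datetime import date
from typing import List

LEVELS = {"High", "Medium", "Low"}


@dataclass
class DefectReport:
    defect_id: str
    description: str
    build_version: str
    module: str
    failed_cases: List[str]
    severity: str            # seriousness w.r.t s/w build functionality
    priority: str            # importance of fixing w.r.t the customer
    reproducible: bool       # True if the defect appears always
    status: str
    detected_by: str
    detected_on: date
    assigned_to: str
    test_environment: str
    attachments: List[str] = field(default_factory=list)  # optional
    suggested_fix: str = ""                                # optional

    def __post_init__(self):
        # Reject values outside the High / Medium / Low scale.
        if self.severity not in LEVELS or self.priority not in LEVELS:
            raise ValueError("severity and priority must be High, Medium or Low")


# Sample values are made up for illustration.
report = DefectReport(
    defect_id="OBS-001",
    description="Login accepts a 3 character user ID",
    build_version="1.0",
    module="Login",
    failed_cases=["TC-LOGIN-03"],
    severity="High",
    priority="Medium",
    reproducible=True,
    status="New",
    detected_by="Tester name",
    detected_on=date(2024, 1, 15),
    assigned_to="Defect tracking team",
    test_environment="Windows 7, Google Chrome",
)
print(asdict(report)["defect_id"])
```

Each `asdict(report)` result corresponds to one row of the Excel sheet.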

TESTER RESPONSIBILITIES :

- Writing scenarios

- Mapping scenarios to requirements

- Writing test cases for each scenario

- Attach test data to scenarios

- Executing test cases

- Reporting defect.
